Buyers don’t discover B2B SaaS the way they did even 18 months ago.

Yes, they still Google. But a growing share of first-touch discovery now happens inside ChatGPT, Gemini, Perplexity and directly in the SERP via Google AI Overviews.

These systems don’t just rank pages. They compose answers: summarize vendors, compare options, and (sometimes) cite a handful of sources.

That’s the shift: your brand can be missing, misrepresented, or overshadowed long before someone lands on your website.

🤙 Prefer done-for-you? Book a call.

📖 Want proof this works? Browse our case studies.

This guide turns our LLM/AI visibility audit approach into a repeatable internal workflow (part of Answer Engine Optimization).

You’ll get:

- A repeatable audit workflow (not a one-off “try a few prompts” experiment)

- Which AI surfaces to test (and why results differ)

- A copy/paste prompt pack

- A logging + scoring system (so results don’t live in a random Google Doc)

- How to diagnose issues and build a fix roadmap

If you’re also evaluating tools for tracking this long-term, start here: tools for tracking brand visibility in AI search.


What “Brand Visibility on LLMs” Actually Means

Brand visibility on LLMs is how often and in what context AI systems mention your brand when users ask category, comparison, and evaluation questions.

The important part is “context.” Visibility isn’t just “are we mentioned?” It’s also:

- Are we recommended or merely listed?

- Where do we show up in a ranked list (top 1–3 vs #9)?

- Do they describe us accurately (features, ICP, pricing model, integrations)?

- Do they cite sources—and are those sources ours, competitors’, or third parties?

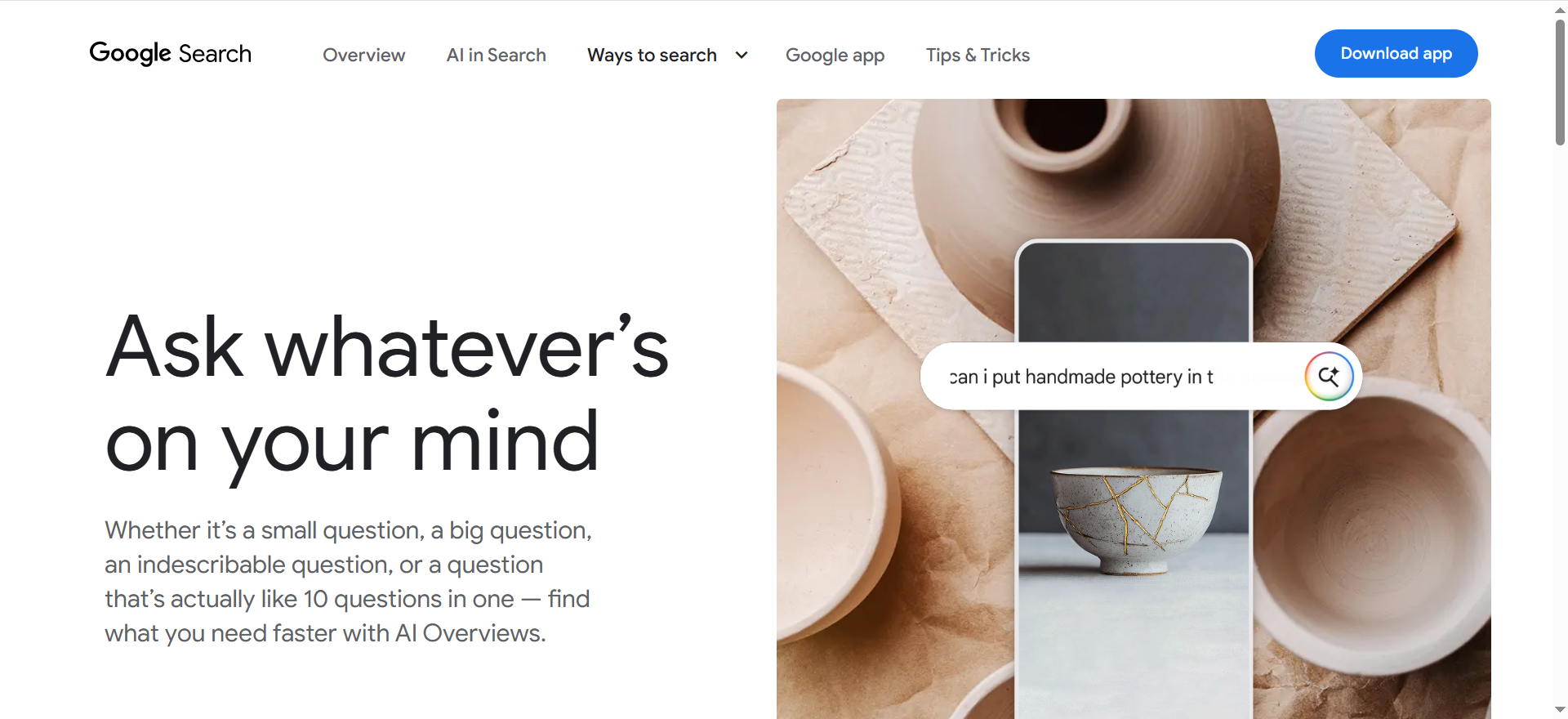

Why this matters: Google AI Overviews explicitly frames itself as a “snapshot” with links to explore more on the web. If your pages never become the cited sources, you’re less likely to benefit from that “explore more” behavior.

And Perplexity positions its experience around “clickable citations” you can verify. That makes citations a practical lever for visibility audits (and improvements).

👉 If you want to structure your content to be easier for AI systems to summarize and cite, see: structuring AEO content for the AI era.

The 5 Metrics That Make this Measurable

Think of these as your “LLM visibility scorecard.” You’ll log and score all five.

- Mention rate: Do you show up at all across non-branded prompts (“best [category] tools”)?

- Recommendation strength: Are you suggested as a solution, or just named in passing?

- Position: If a tool list is provided, where are you?

- Accuracy: Are claims correct? (This is where reputational risk shows up.)

- Citations (where available): Do AI surfaces cite your site or credible sources that support your positioning?

👉 When you’re ready to turn findings into actions that increase mentions/citations, pair this audit with: SEO strategies for AI visibility (and this proof-backed example: GEO case study).

The Surfaces you Should Audit (and Why Outputs Differ)

If you only test one system, your audit will lie to you—because each surface pulls from different sources, shows citations differently (or not at all), and can change based on locale, language, and whether “web/search” is on.

Minimum set (audit all 4): Google AI Overviews/AI Mode, ChatGPT (web on/off), Gemini, Perplexity.

Fast start: grab the audit template from Free Resources and log the same prompt set across all four surfaces.

Want us to run the sprint for you? Book a call

Here is what to check and capture on each one:

1) Google AI Overviews (and AI Mode)

Google AI surfaces can reshape click behavior—so you’re auditing who gets cited and whether you’re part of the “explore more” pathway. If you haven’t seen how this impacts BOFU pages, read our AI Overview SEO BOFU case study.

What you’re checking here

- Does an AI Overview appear for your category + use case queries?

- Which domains get cited?

- Do your pages appear as supporting sources—or do competitors dominate citations?

Screenshot you’ll capture

- The AI Overview panel

- The list of cited links

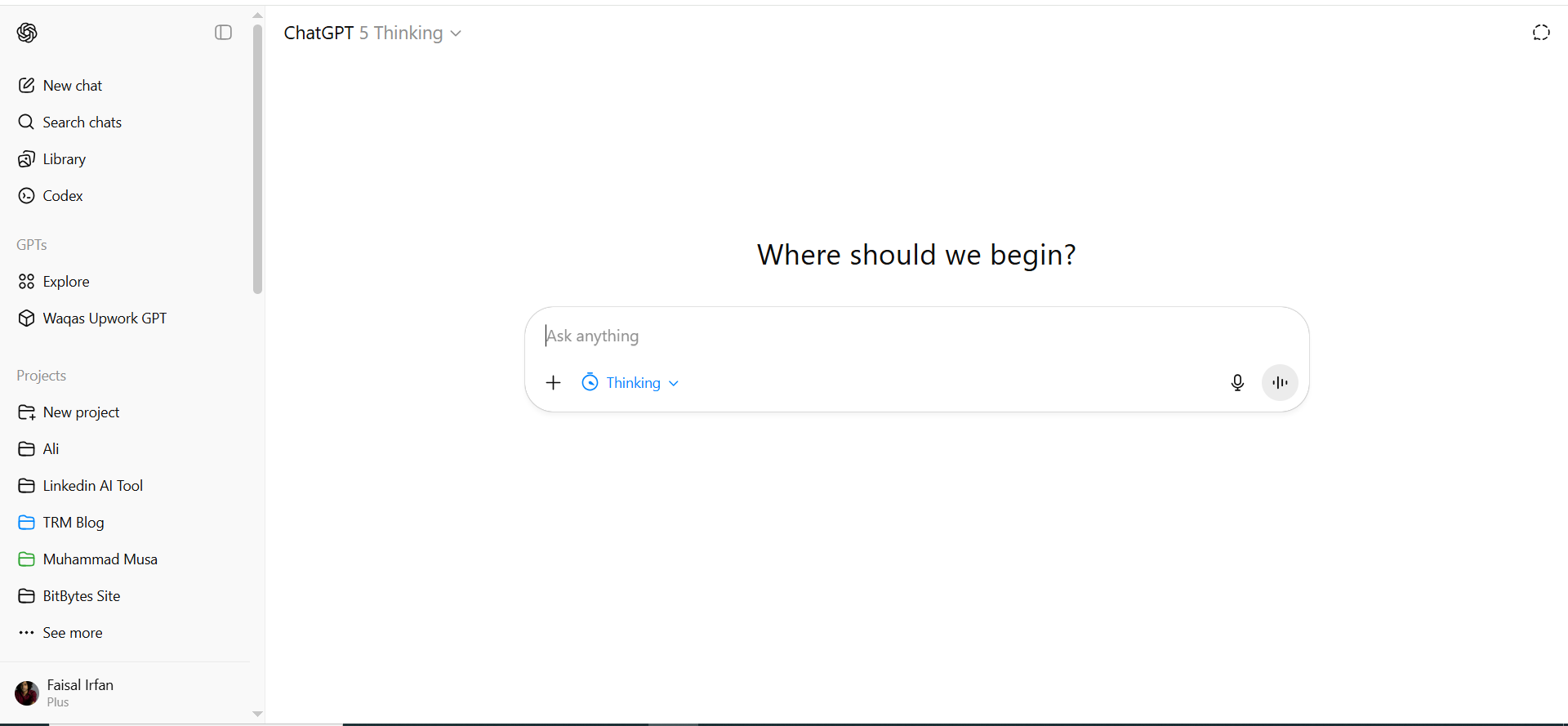

2) ChatGPT (with Web/Search On Vs Off)

In many setups, ChatGPT can operate in a mode that shows sources/citations. Your audit should explicitly note whether you ran each test with web/search enabled, because behavior can change.

What you’re checking

- Your inclusion in category prompts

- How you’re framed vs competitors

- Whether citations appear, and what domains are cited (when shown)

Screenshot you’ll capture

- The answer (full)

- The sources panel (if shown)

3) Gemini

Gemini is a major buyer surface for research and comparisons. Treat it as a peer to ChatGPT in your audit—not an “extra.”

What you’re checking

- Mention + recommendation outcomes for core prompts

- Accuracy of positioning (especially ICP and features)

- Consistency with what you want your “one sentence summary” to be

Screenshot you’ll capture

- The full response

- Any ranked list or side-by-side comparison

4) Perplexity

Perplexity is particularly audit-friendly because it emphasizes citations.

What you’re checking

- Which sources Perplexity uses when it describes your category

- Whether your domain is cited

- Whether competitor pages are the “default references” for your space

Screenshot you’ll capture

- The answer

- Citations expanded

Optional Surfaces (only if Your ICP Uses Them Heavily)

Add Bing Copilot, You.com, or niche vertical AIs if your ICP uses them heavily or your category is winner-take-most. If you’re including Microsoft surfaces, see: Bing AI.

The LLM Brand Visibility Audit Workflow

This workflow is designed for repeatability: run it once for a baseline, then repeat monthly/quarterly with the same prompts to track lift. (This is the same mindset as any disciplined audit, just applied to AI answers instead of blue links.)

Step 0: Set Scope so Results are Repeatable

Before you start, decide what “this audit” covers.

At minimum:

- One core category (e.g., “SEO platform,” “email marketing,” “product analytics”)

- One primary ICP (e.g., “B2B SaaS marketing teams,” “PLG startups,” “enterprise”)

- 3–8 primary competitors

- A fixed list of prompts (we’ll build this next)

Now open the template from Free Resources and fill in the scope fields:

- Brand name + common abbreviations

- Primary domain

- Category

- ICP

- Regions/locales

- Languages

- Competitors

- Audit date + auditor

Why this matters: if you don’t lock variables, you won’t know whether changes came from your work—or from model updates and environment changes.
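If you keep the scope in code rather than only in the template, a frozen record is one way to make "locked variables" literal. The sketch below is an illustration, not part of the template: the class name, field names, and example values are all hypothetical, and the 3 to 8 competitor check simply mirrors the guidance above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class AuditScope:
    """Locked variables for one audit run (field names are illustrative)."""
    brand: str
    aliases: tuple[str, ...]
    domain: str
    category: str
    icp: str
    locales: tuple[str, ...]
    languages: tuple[str, ...]
    competitors: tuple[str, ...]
    audit_date: date
    auditor: str

    def __post_init__(self) -> None:
        # The guide suggests 3-8 primary competitors; reject anything else.
        if not 3 <= len(self.competitors) <= 8:
            raise ValueError("expected 3-8 primary competitors")


# Hypothetical example values.
scope = AuditScope(
    brand="ExampleCo",
    aliases=("ECo",),
    domain="example.com",
    category="product analytics",
    icp="PLG startups",
    locales=("en-US",),
    languages=("en",),
    competitors=("RivalA", "RivalB", "RivalC"),
    audit_date=date(2025, 1, 15),
    auditor="J. Doe",
)
```

Re-using the same `scope` object for the baseline and every retest makes it obvious when someone changes a variable between runs.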

Step 1: Build Your Prompt Set (20–50 Prompts) Based on Buyer Intent

Most people approach this backward: they test branded prompts (“What is [Brand]?”) and feel good.

That’s not the real game.

The real visibility question is: Do you show up in non-branded, high-intent prompts where buyers shortlist options?

You’ll build prompts across four buckets:

Bucket A: Discovery Prompts (Top-of-funnel)

These mimic “best tools” searches.

Examples:

- “What are the best [category] tools for [ICP/use case]?”

- “Rank the top [category] platforms for [constraint: budget/team size/region].”

- “What should a [role] look for in a [category] tool?”

Bucket B: Comparison Prompts (Mid-funnel)

These mimic “Brand vs competitor” pages.

💡 Examples:

- “[Brand] vs [Competitor]: which is better for [use case]?”

- “Compare [Brand], [C1], [C2] for [use case].”

Bucket C: Alternatives Prompts (Mid-funnel)

These mimic switching behavior (high commercial intent).

💡 Examples:

- “What are the best alternatives to [Competitor]?”

- “If I’m switching from [Competitor], what should I choose?”

Bucket D: Evaluation & Objections Prompts (Bottom-funnel)

These are where accuracy and reputation matter.

💡 Examples:

- “Is [Brand] a good choice for [use case]? Pros/cons.”

- “What are common complaints about [Brand]?”

- “Does [Brand] integrate with [platform]?”

Open the Prompt Library tab in the template and add at least 25 prompts to start.

👉 If you need help choosing prompts that actually map to zero-click / AI answers, use: how to pick keywords for zero-click SERPs.

If you want, TRM can build the prompt set (query fan-out + competitor mapping) and run the audit sprint—book a call.

Step 2: Run Prompts Across Each Surface (Use a Clean Testing Protocol)

This is where audits get sloppy. Don’t improvise. Use a fixed protocol.

Your testing protocol

For each prompt:

- Start a new session (new chat, private window, or equivalent).

- Confirm your locale/language.

- Note whether the surface is Web/Search On or Off (when applicable).

- Paste the prompt exactly (copy/paste—no edits).

- Capture the output + citations (if shown).

- Log it immediately.
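One way to keep the protocol from drifting is to generate the full run plan up front, so every prompt has a row for every surface and mode before anyone opens a chat window. This is a minimal sketch: the mode labels (`web_on`, `web_off`, `default`) and the row keys are assumptions for illustration, and only ChatGPT is split by mode because that is the only split the guide calls for.

```python
# Surface -> modes to test. Labels are illustrative, not product terminology.
SURFACE_MODES = {
    "Google AI Overviews": ["default"],
    "ChatGPT": ["web_on", "web_off"],
    "Gemini": ["default"],
    "Perplexity": ["default"],
}


def build_run_plan(prompt_ids: list[str], locale: str) -> list[dict]:
    """Return one checklist row per prompt, surface, and mode."""
    plan = []
    for prompt_id in prompt_ids:
        for surface, modes in SURFACE_MODES.items():
            for mode in modes:
                plan.append({
                    "prompt_id": prompt_id,
                    "surface": surface,
                    "mode": mode,
                    "locale": locale,
                    "new_session": True,  # reminder: fresh chat or private window
                    "done": False,        # flip once output and evidence are logged
                })
    return plan


plan = build_run_plan(["P-001", "P-002"], locale="en-US")
```

With 25 prompts this produces 125 rows, which is a useful sanity check on how long a full run will take.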

What “capture evidence” means

At minimum:

- screenshot of full answer

- screenshot of citations list (where available)

- conversation link (if the tool supports share links)

- date/time

Why evidence matters: AI outputs drift. You need proof of what was said on that date in that mode.

👉 If you’re tightening credibility signals on your site to improve how you get summarized/cited, see: Google experience & evidence.

Step 3: Log Results (so You Can Score and Compare)

Open the Run Log tab and fill one row per prompt per surface.

Log these fields (the template already includes them):

- Date, auditor

- Surface (ChatGPT / Gemini / Perplexity / Google AI Overviews)

- Mode (Web/No Web)

- Locale + model/version (if you can see it)

- Prompt ID + prompt text

- Response snippet (short)

- Brand mention (Y/N)

- Mention type (Primary/Secondary/None)

- Position (1 = top)

- Sentiment (-2 to +2)

- Accuracy (0–2)

- Cited? (Y/N) + cited URLs

- Competitors mentioned

- Issue notes

- Screenshot/evidence link

💡 Example: a “good” log entry (what it looks like)

Let’s say your prompt is:

“What are the best SEO platforms for B2B SaaS teams?”

A strong entry might look like:

- Brand Mention: Y

- Mention Type: Primary

- Position: 2

- Sentiment: +1

- Accuracy: 2

- Cited? Y (your domain included)

A weak entry might look like:

- Brand Mention: N

- Competitors Mentioned: 5 competitors

- Notes: Competitor X listed #1 with a strong recommendation
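If your log lives in code or a CSV export rather than the spreadsheet, a small validated record helps keep entries consistent across auditors. The sketch below is an assumption-laden illustration: the class and field names are hypothetical (they cover a subset of the fields listed above), and the range checks follow the scales given in this guide.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Optional


@dataclass
class RunLogEntry:
    prompt_id: str
    surface: str
    mode: str
    brand_mention: bool
    mention_type: str = "None"      # Primary / Secondary / None
    position: Optional[int] = None  # 1 = top; None when the brand is not listed
    sentiment: int = 0              # -2 to +2
    accuracy: int = 0               # 0 to 2
    cited: bool = False
    competitors: str = ""
    notes: str = ""

    def __post_init__(self) -> None:
        if self.mention_type not in {"Primary", "Secondary", "None"}:
            raise ValueError("mention_type must be Primary, Secondary, or None")
        if not -2 <= self.sentiment <= 2:
            raise ValueError("sentiment must be between -2 and +2")
        if not 0 <= self.accuracy <= 2:
            raise ValueError("accuracy must be between 0 and 2")
        if self.position is not None and self.position < 1:
            raise ValueError("position starts at 1")


def to_csv(entries: list[RunLogEntry]) -> str:
    """Serialize entries to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(RunLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# The "strong" and "weak" entries from the example above (surfaces are made up).
strong = RunLogEntry("P-001", "Perplexity", "default", brand_mention=True,
                     mention_type="Primary", position=2, sentiment=1,
                     accuracy=2, cited=True)
weak = RunLogEntry("P-001", "Gemini", "default", brand_mention=False,
                   competitors="5 competitors",
                   notes="Competitor X listed #1 with a strong recommendation")
csv_text = to_csv([strong, weak])
```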

Step 4: Score Each Prompt (Your Visibility Scorecard)

You’re not scoring for fun. You’re scoring so you can prioritize fixes.

A simple scoring model should reward:

- being included

- being recommended

- being near the top (think average position logic)

- being accurate

- being cited (where possible)

Here’s a practical rubric (and it matches the template’s Scoring tab):

| Metric | What it measures | Scale | Suggested weight |
|---|---|---|---|
| Mention | Did the model mention your brand? | 0/1 | 35% |
| Mention Type | Primary vs secondary mention | 0–2 | 15% |
| Position | Rank in list (1 = top) | 1–10 | 15% |
| Sentiment | Negative → positive tone | -2 to +2 | 10% |
| Accuracy | Correctness of claims | 0–2 | 20% |
| Citations | Any citations shown / your domain present | 0/1 | 5% |

You don’t need perfect math. You need consistency—and weights that reflect revenue impact.
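Because the six metrics use different scales, each one has to be normalized before the weights can be applied. The sketch below shows one possible way to do that and is not necessarily the formula in the template's Scoring tab. The weights come from the rubric above; the normalization choices (position mapped so that 1 scores 1.0 and 10 scores 0.1, sentiment shifted from -2..+2 to 0..1, and a score of 0 when the brand is not mentioned at all) are assumptions.

```python
from typing import Optional

# Weights from the rubric above; they sum to 1.0.
WEIGHTS = {
    "mention": 0.35,
    "mention_type": 0.15,
    "position": 0.15,
    "sentiment": 0.10,
    "accuracy": 0.20,
    "citations": 0.05,
}
MENTION_TYPE_POINTS = {"None": 0, "Secondary": 1, "Primary": 2}


def score_prompt(mentioned: bool, mention_type: str, position: Optional[int],
                 sentiment: int, accuracy: int, cited: bool) -> float:
    """Return a 0-1 visibility score for one prompt on one surface."""
    if not mentioned:
        return 0.0  # assumption: no mention means no credit for other metrics
    if position is None:
        position_part = 0.0  # mentioned, but not in a ranked list
    else:
        clamped = min(max(position, 1), 10)
        position_part = (11 - clamped) / 10  # 1 -> 1.0, 10 -> 0.1
    parts = {
        "mention": 1.0,
        "mention_type": MENTION_TYPE_POINTS[mention_type] / 2,
        "position": position_part,
        "sentiment": (sentiment + 2) / 4,  # -2..+2 -> 0..1
        "accuracy": accuracy / 2,
        "citations": 1.0 if cited else 0.0,
    }
    return sum(WEIGHTS[name] * parts[name] for name in WEIGHTS)


# The "strong" example entry: Primary, position 2, sentiment +1, accuracy 2, cited.
strong_score = score_prompt(True, "Primary", 2, 1, 2, True)
```

Under these assumptions the strong example scores roughly 0.96. Whatever normalization you pick, keep it fixed between audits so score changes reflect the answers and not the formula.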

Step 5: Identify Issues (Patterns Explain “Why” You’re Missing)

Once you’ve run 20–50 prompts, patterns show up fast. Use an issue tracker that captures:

- Issue type

- What happened

- Why it matters

- Suggested fix

- Evidence link

- Priority (P0–P3)
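The tracker fields above map naturally onto a small record type. This is a hypothetical sketch (class name, field names, and sample issues are invented for illustration), treating P0 as the most urgent level.

```python
from dataclasses import dataclass

PRIORITIES = ("P0", "P1", "P2", "P3")  # P0 = most urgent


@dataclass
class Issue:
    issue_type: str
    what_happened: str
    why_it_matters: str
    suggested_fix: str
    evidence_link: str
    priority: str

    def __post_init__(self) -> None:
        if self.priority not in PRIORITIES:
            raise ValueError(f"priority must be one of {PRIORITIES}")


def by_priority(issues: list[Issue]) -> list[Issue]:
    """Most urgent first; ties keep their original order (sorted is stable)."""
    return sorted(issues, key=lambda issue: PRIORITIES.index(issue.priority))


# Made-up sample issues and evidence paths.
issues = [
    Issue("Missing from category lists", "Absent on 8 of 10 discovery prompts",
          "Not on the default shortlist", "Clarify category and best-for framing",
          "evidence/P-003-gemini.png", "P1"),
    Issue("Wrong facts", "Pricing model described incorrectly",
          "Misleads buyers during evaluation", "Publish a canonical pricing page",
          "evidence/P-020-chatgpt.png", "P0"),
]
ordered = by_priority(issues)
```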

👉 If your fixes involve building “citation-friendly clarity” pages (comparisons, alternatives, crisp definitions), these two guides help: Structuring AEO content for the AI era and AEO-ready SaaS blogs guide

👉 And if you want a broader improvement plan after the audit, use: best SEO strategies for AI visibility

The 7 Most Common LLM Visibility Issues (and What They Usually Mean)

▶️ Issue 1: You’re Missing From Category Lists

You’re not part of the “default shortlist” for your category.

Common causes:

- weak category association on your site (“we do everything” messaging)

- no strong “best for” framing

- competitors have more comparison/roundup coverage

Fix roadmap (highest ROI)

👉 Rebuild your category + “best for” clarity using Structuring AEO content for the AI era.

👉 Turn TOFU visibility into commercial outcomes with best SEO strategies for AI visibility.

▶️ Issue 2: You’re Mentioned but not Recommended

You appear in lists but the narrative is lukewarm.

Common causes:

- generic positioning

- unclear differentiation

- benefits described without proof (case studies, benchmarks, credible citations)

Fix roadmap

- Add proof buyers trust via case studies.

- Strengthen credibility signals using Google experience & evidence and author expertise for SaaS blogs.

▶️ Issue 3: Competitors Dominate Comparisons

Competitors appear as the “obvious pick” or default choice.

Common causes:

- they have more “vs” content (even if it’s not great)

- they have stronger third-party coverage

- they’re easier to summarize in one sentence (clear entity)

Fix roadmap

- Build comparison pages that are easy to cite with Structuring AEO content for the AI era.

- Use AEO-ready SaaS blogs guide as your publishing blueprint.

▶️ Issue 4: The Model Gets Key Facts Wrong

This is the one that can quietly kill trust.

Common causes:

- outdated pricing/features pages

- inconsistent messaging across site, docs, listings, and reviews

- missing “canonical” explanation pages (pricing model, integrations, use cases)

Fix roadmap

- Run a cleanup sprint with SaaS content audit + fix sprint.

- Reinforce trust using E-E-A-T plus the context from does E-E-A-T still matter after SGE?

▶️ Issue 5: Citations Never Include Your Site

This matters more in citation-forward surfaces like Perplexity and Google AI Overviews, where links are explicitly part of the experience.

Common causes:

- your best “answer pages” don’t exist (no clear definitions, comparisons, how-tos)

- competitor pages are more citation-friendly (clear structure, direct answers)

- you’re absent from third-party sources that keep getting cited

Fix roadmap

- Build “citation-friendly clarity” pages with AE SEO playbook for AI answers + citation.

- Add structured Q&A where it fits using FAQ schema (and keep your supporting references clean so you can earn more citations).

▶️ Issue 6: You Only Show Up For One ICP/Use Case

You’re visible for “enterprise,” invisible for “startup,” or vice versa.

Common cause:

- your site only speaks clearly to one persona and uses vague language elsewhere

Fix roadmap

- Expand persona/use-case coverage with best SaaS keyword research workflow and how to pick keywords for zero-click SERPs.

▶️ Issue 7: Visibility Varies Wildly Across Surfaces

This is normal. The goal of the audit isn’t to force identical answers; it’s to find gaps and improve overall outcomes.

Fix roadmap

- Track lift over time using tools for tracking brand visibility in AI search.

- Re-run the full workflow on a fixed cadence: audit brand visibility on LLMs.

Copy/Paste Prompt Pack (25 prompts)

Replace bracketed text and reuse the same pack across surfaces.

Discovery (Top-of-funnel)

- What are the best [category] tools for [ICP]?

- What is the best [category] platform for [use case]?

- Rank the top [category] tools for [use case] and explain why.

- What should a [role] look for in a [category] tool?

- What are the best [category] tools for [constraint: budget/team size/region]?

Comparison (Mid-funnel)

- [Brand] vs [Competitor]: which is better for [use case]?

- Compare [Brand], [C1], [C2] for [use case] (pros/cons).

- Which is easier to implement: [Brand] or [Competitor]?

- Which is better for integrations: [Brand] or [Competitor]?

- Which has better support for [ICP]: [Brand] or [Competitor]?

Alternatives (Mid-funnel)

- What are the best alternatives to [Brand] for [use case]?

- What are the best alternatives to [Competitor]?

- If I’m switching from [Competitor], what should I choose and why?

- What’s the best [category] tool if I don’t want [Competitor]?

- Which tools are most similar to [Brand]?

Evaluation & Objections (Bottom-funnel)

- Is [Brand] a good choice for [use case]? Pros/cons.

- What are common complaints about [Brand]?

- Does [Brand] integrate with [platform]? How?

- Is [Brand] better for [ICP] or [ICP2]?

- What’s the pricing model for [Brand] (high-level)?

Source-Pressure Prompts (Helps With Citations)

- Cite sources: What is [Brand] and who is it for?

- What sources support recommending [Brand] for [use case]?

- Which pages should I read to evaluate [Brand] vs [Competitor]?

- If you had to summarize [Brand] in one sentence, what would you say?

- What is [Brand] best known for?
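To make "replace bracketed text" repeatable, you can fill the templates programmatically and assign stable Prompt IDs at the same time. The sketch below is one possible approach: the placeholder values are hypothetical, and treating the text before a colon as the key (so `[constraint: budget/team size/region]` is keyed by `constraint`) is an assumption about how you want to name your variables.

```python
import re

# Hypothetical values; keys match the bracketed names used in the pack.
VALUES = {
    "category": "product analytics",
    "ICP": "PLG startups",
    "use case": "funnel analysis",
    "Brand": "ExampleCo",
    "Competitor": "RivalCo",
}

PLACEHOLDER = re.compile(r"\[([^\]]+)\]")


def fill(template: str, values: dict[str, str]) -> str:
    """Replace each [name] with its value; fail loudly on unknown names."""
    def substitute(match: re.Match) -> str:
        # "[constraint: budget/team size/region]" is keyed by "constraint".
        key = match.group(1).split(":", 1)[0].strip()
        if key not in values:
            raise KeyError(f"no value for placeholder [{key}]")
        return values[key]
    return PLACEHOLDER.sub(substitute, template)


def build_prompt_set(templates: list[str], values: dict[str, str]) -> dict[str, str]:
    """Assign stable IDs (P-001, P-002, ...) in template order."""
    return {f"P-{i:03d}": fill(t, values) for i, t in enumerate(templates, start=1)}


templates = [
    "What are the best [category] tools for [ICP]?",
    "[Brand] vs [Competitor]: which is better for [use case]?",
]
prompts = build_prompt_set(templates, VALUES)
```

Raising on an unknown placeholder is deliberate: a prompt that still contains `[ICP2]` would silently skew results if it reached a model.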

How to Run this Audit on Each Surface (with Screenshot Checklist)

Below is the exact “how-to” you can follow (and what to screenshot) so your audit is repeatable, not vibes-based.

▶️ Use the template: grab it from Free Resources.

👉 If you want a done-for-you sprint: book a call.

👉 If you want the full guide context: audit brand visibility on LLMs.

Screenshot Checklist (do This For Every Prompt)

Save these 3 items per surface, per prompt:

- Screenshot of the full answer (no cropping)

- Screenshot of citations / sources (if shown)

- Share link (if the tool supports it) pasted into the Run Log

▶️ ChatGPT (Search/Web ON)

- Start a new chat.

- Confirm Search/Web is enabled (if available).

- Paste the prompt text exactly and record its Prompt ID (e.g., P-001) in the Run Log.

- After the response, open the sources/citations panel (if shown).

- Save:

  - screenshot of full answer

  - screenshot of sources

  - share link (if available), pasted into the Run Log

▶️ ChatGPT (Search/Web OFF)

Repeat the same prompt with Search/Web disabled. You’re testing “model-only” mention and narrative.

▶️ Gemini

- Start a clean session.

- Paste prompt exactly.

- Capture full output (don’t crop the ranking list).

- Log mention type, position, accuracy.

▶️ Perplexity

- Paste prompt.

- Expand the citations (they are the point of the product).

- Capture answer + citations expanded.

- Log cited domains.

▶️ Google AI Overviews

- Search your query (category + ICP/use case).

- If an AI Overview appears, expand the cited links. Google describes AI Overviews as a snapshot with links to explore more.

- Screenshot the overview and citations.

- Log which domains are cited and whether your pages appear.

▶️ If you want a real-world example of how this impacts BOFU outcomes, see our AI Overview SEO BOFU case study.

Turning Results into a Decision-Ready Report

Your visibility audit shouldn’t end with “interesting findings.” It should end with decisions.

Here’s the reporting structure that works well for SaaS teams:

1) Visibility Scorecard (What Changed, Where you Win/Lose)

Summarize:

- mention rate by surface

- average score by surface

- average score by funnel stage

- competitor dominance (which competitor appears most often)

If you want to keep this lightweight, one table can do most of the work:

| Surface | Mention Rate | Avg Score | Biggest Gap |
|---|---|---|---|
| ChatGPT (Web On) | 32% | 0.41 | Missing from discovery prompts |
| Gemini | 28% | 0.38 | Weak differentiation in comparisons |
| Perplexity | 46% | 0.52 | Citations favor competitor review sites |
| Google AI Overviews | 18% | n/a | Not cited in AI Overview sources |

💡 Tip: When a surface provides ranked lists, track average position for your brand vs competitors.
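The per-surface numbers in a table like the one above can be computed directly from the run log. This is a minimal sketch that assumes each logged row is a dict with hypothetical keys `surface`, `mentioned`, `position`, and a precomputed 0 to 1 `score`; the sample rows are invented.

```python
from collections import defaultdict


def summarize_by_surface(rows: list[dict]) -> dict[str, dict]:
    """Mention rate, average score, and average position per surface."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["surface"]].append(row)

    summary = {}
    for surface, items in grouped.items():
        mentioned = [r for r in items if r["mentioned"]]
        positions = [r["position"] for r in mentioned if r["position"] is not None]
        summary[surface] = {
            "mention_rate": len(mentioned) / len(items),
            "avg_score": sum(r["score"] for r in items) / len(items),
            # Average position only over answers where the brand was ranked.
            "avg_position": sum(positions) / len(positions) if positions else None,
        }
    return summary


# Invented sample rows.
rows = [
    {"surface": "Perplexity", "mentioned": True, "position": 2, "score": 0.96},
    {"surface": "Perplexity", "mentioned": False, "position": None, "score": 0.0},
    {"surface": "Gemini", "mentioned": False, "position": None, "score": 0.0},
]
summary = summarize_by_surface(rows)
```

The same grouping works for funnel stage or competitor if you swap the grouping key.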

2) Accuracy + Risk Report (what AI Gets Wrong About You)

List the top inaccuracies and link evidence.

If a claim is materially wrong (pricing, compliance, security, integrations), that’s typically P0.

3) “Sources to Win” List (Citation Targets)

Pull domains that appear repeatedly in citations (especially Perplexity + Google AI surfaces). Those domains are your targets for:

- PR placements

- review profiles

- guest data contributions

- partnership pages

- comparative content
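Finding the domains that "appear repeatedly" is a counting exercise over the cited URLs in your log. The sketch below assumes you have one list of cited URLs per logged answer; the function name and the example URLs are hypothetical. It counts each domain at most once per answer, so a single answer citing the same site five times does not inflate the total, and it leaves out your own domain.

```python
from collections import Counter
from urllib.parse import urlparse


def citation_targets(cited_urls_per_answer: list[list[str]], own_domain: str) -> Counter:
    """Count how many answers cite each third-party domain."""
    counts = Counter()
    for urls in cited_urls_per_answer:
        domains = set()
        for url in urls:
            host = urlparse(url).hostname or ""  # None for malformed URLs
            host = host.removeprefix("www.")
            if host and host != own_domain:
                domains.add(host)
        counts.update(domains)  # each domain counted once per answer
    return counts


# Invented example data using reserved example domains.
answers = [
    ["https://www.example.org/best-tools", "https://example.org/pricing",
     "https://example.net/roundup"],
    ["https://example.org/vs", "https://www.example.com/product"],
]
targets = citation_targets(answers, own_domain="example.com")
ranked = targets.most_common()
```

Sorting by count gives a first-pass outreach list; you will still want to review each domain by hand before treating it as a target.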

👉 If you don’t want to do this manually forever, see: tools for tracking brand visibility in AI search.

Next steps: The 30/60/90-Day Roadmap to Improve LLM Visibility

This roadmap is intentionally opinionated: most brands waste time “optimizing prompts” instead of fixing the real inputs (content, positioning, and authority).

Days 0–30: Fix Your “Entity Clarity” Foundation

Your goal in the first month is to become easy to summarize accurately.

Prioritize pages that answer:

- What is [Brand]?

- Who is it for (and who is it not for)?

- What is it best for (clear use cases)?

- What makes it different (sharp differentiation)?

- Proof (results + case studies)

Practical checklist:

- Update homepage positioning to a single clear category + “best for” line

- Create/refresh: pricing explanation, integrations hub, use cases, docs “getting started”

- Add definition blocks + FAQs (use FAQ schema where it fits)

- Ensure internal links connect: product → use case → comparison → docs
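For the FAQ schema item in the checklist, the markup is a JSON-LD object using the schema.org `FAQPage`, `Question`, and `Answer` types. The sketch below generates it from question and answer pairs; the helper name and the sample text are hypothetical, and whether any search engine or AI surface actually uses the markup is outside your control.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build FAQPage JSON-LD text from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Invented sample content.
markup = faq_jsonld([
    ("What is ExampleCo?", "ExampleCo is a product analytics tool for PLG startups."),
    ("Does ExampleCo integrate with Slack?", "Yes, via a native integration."),
])
```

The resulting string goes inside a `<script type="application/ld+json">` tag on the page, and the questions and answers should match the visible FAQ text.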

👉 If this feels like a lot, consider our SaaS content audit + fix sprint.

Days 31–60: Publish Money Pages (Comparisons + Alternatives)

This is where most of your “recommended” outcomes come from.

Ship:

- [Brand] vs [Competitor] (one page per serious competitor)

- Best [Competitor] alternatives

- Best [category] tools for [ICP] (honest, structured, useful)

The key is not volume. It’s “citation-friendly clarity”:

- short paragraphs

- direct answers

- pros/cons

- clear use-case fit

- transparent comparison criteria

Days 61–90: Win Citations (The Multiplier)

Citations matter most on surfaces that show sources (Perplexity, and Google AI surfaces that link out).

Your 90-day goal is to increase the number of credible places where your brand is:

- mentioned in category context

- compared fairly to competitors

- tied to a specific “best for” narrative

Tactics that work well for SaaS:

- publish a small data study or benchmark others can cite

- earn inclusion in high-quality roundups and tool stacks

- improve review ecosystem presence (credible, consistent messaging)

- collaborate with partners on co-marketed “integration” pages

▶️ Get the template from Free Resources. Or skip the manual work and book a call for a done-for-you audit sprint.

Frequently Asked Questions

What is an LLM brand visibility audit?

It’s a structured process to measure whether AI systems mention your brand, recommend it, describe it accurately, and cite credible sources. It’s like an SEO audit in discipline, but focused on AI-generated answers rather than blue-link rankings.

Which AI surfaces should I audit?

At minimum: Google AI Overviews/AI Mode, ChatGPT, Gemini, and Perplexity. Google AI Overviews are designed as snapshots with links to explore more, and Perplexity emphasizes clickable citations, so source visibility is especially important on both.

How many prompts do I need?

Start with 20–50 prompts split across discovery, comparison, alternatives, and evaluation. That’s enough to reveal patterns (missing category visibility, competitor dominance, inaccurate claims) without turning it into a research project.

Why do AI answers change between runs?

Models and retrieval systems update, sources on the web change, and results can vary by locale, language, and session context. That’s why your audit must lock variables and capture evidence.

What should I do if an AI system gets facts about my brand wrong?

Log the output with screenshots, identify what’s outdated or inconsistent across your site and third-party profiles, then publish clear “canonical” pages (pricing, integrations, use cases) and reinforce accurate messaging through credible sources.

Do citations matter for AI visibility?

Yes, especially on surfaces that show sources. Google AI Overviews are explicitly presented with links to explore more, and Perplexity highlights clickable citations as a core feature.

Can I run this audit without paid tools?

Yes. This playbook is designed for manual auditing. Paid tools can speed up monitoring, but the core advantage is the repeatable workflow: prompts → logs → scores → issues → fixes → retest.

Want Us to Run This For You?

If you don’t have bandwidth to run prompts across multiple AI surfaces, log evidence, score outcomes, and turn it into a prioritized roadmap, TRM can run a done-for-you AI Visibility / LLM audit sprint.

You’ll get:

- a customized prompt set for your category + ICP

- multi-surface testing (ChatGPT, Gemini, Perplexity, Google AI Overviews)

- scored visibility report + issue tracker

- a 30/60/90 roadmap tied to revenue intent

🤙 Book a call at The Rank Masters

✉️ Tool vendors / partners: email info@therankmasters.com